The term ‘data’ is not new to us. It is one of the first things taught when you study Information Technology and computers. If you recall, data is considered the raw form of information. Though it has been around for a decade, the term Big Data is still a buzzword these days. As the name suggests, Big Data means loads and loads of data, which can be processed in different ways using different methods and tools to extract the required information. This article explains the concepts of Big Data using the 3 V’s described by Doug Laney, a pioneer in the field of data warehousing who is considered to have initiated the field of Infonomics (Information Economics).

Before you proceed, you might want to read our articles on the Basics of Big Data and Big Data Usage to grasp the essence. They complement this post and explain Big Data concepts further.

Big Data 3 Vs

Earlier, data accumulated in huge quantities through different means was filed in different databases and dumped after some time. When the idea emerged that the more data you have, the easier it is to find different and relevant information using the right tools, companies started storing data for longer periods. This means adding new storage devices or using the cloud to store the data in whatever form it was procured: documents, spreadsheets, databases, HTML, etc. It is then arranged into proper formats using tools capable of processing huge chunks of data.

NOTE: The scope of Big Data is not limited to the data you collect and store on your premises and in the cloud. It can include data from other sources, including but not limited to items in the public domain.

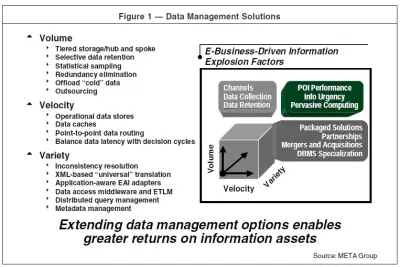

The 3D Model of Big Data is based on the following V’s:

- Volume: refers to the management of data storage

- Velocity: refers to the speed of data processing

- Variety: refers to grouping data from different, seemingly unrelated data sets

The following paragraphs explain Big Data modeling by talking about each dimension (each V) in detail.

A] Volume of Big Data

When talking about Big Data, one might understand volume as a huge collection of raw information. Though that is true, it is also about data storage costs. Important data can be stored on-premises and in the cloud, the latter being the more flexible option. But do you need to store everything?

According to a whitepaper released by META Group, when the volume of data increases, parts of the data start looking unnecessary. Further, it states that businesses should retain only the volume of data they intend to use. Other data may be discarded, or if the businesses are reluctant to let go of “supposedly unimportant data”, it can be dumped on unused computer devices and even on tapes so that businesses do not have to pay for storing such data.

I used “supposedly unimportant data” because I, too, believe that data of any type can be required by any business in the future, sooner or later, and thus it needs to be kept for a good amount of time before you know that it is indeed unimportant. Personally, I dump older data to hard disks from yesteryear and sometimes to DVDs. The main computers and cloud storage contain the data that I consider important and know that I will be using. Among this data too, there is use-once data that may end up on an old HDD after a few years. The above example is just for your understanding. It does not fit the description of Big Data, as the amount is far smaller than what enterprises perceive as Big Data.
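The retention idea described above (keep what you intend to use on primary storage, move the rest to cheaper media) can be sketched in a few lines of Python. This is only an illustration under assumptions: the `ARCHIVE_AFTER_DAYS` threshold, the `planned_use` flag, and the sample dataset names are hypothetical and do not come from the whitepaper.

```python
from datetime import date

# Hypothetical threshold; the article does not prescribe a retention period.
ARCHIVE_AFTER_DAYS = 365


def choose_tier(last_used: date, planned_use: bool, today: date) -> str:
    """Return 'primary' for data the business intends to use, else decide by idle time."""
    if planned_use:
        return "primary"
    idle_days = (today - last_used).days
    # Data that has sat idle longer than the threshold goes to cheap storage.
    return "archive" if idle_days > ARCHIVE_AFTER_DAYS else "primary"


def plan_storage(datasets: list, today: date) -> dict:
    """Map each dataset name to the storage tier chosen for it."""
    return {
        d["name"]: choose_tier(d["last_used"], d["planned_use"], today)
        for d in datasets
    }


# Made-up sample inventory, for illustration only.
datasets = [
    {"name": "orders_2024", "last_used": date(2024, 5, 1), "planned_use": True},
    {"name": "clickstream_2019", "last_used": date(2019, 3, 1), "planned_use": False},
    {"name": "survey_2024", "last_used": date(2024, 4, 15), "planned_use": False},
]

plan = plan_storage(datasets, today=date(2024, 6, 1))
```

Here "archive" stands in for the unused devices or tapes mentioned above. Nothing is deleted, which matches the view that supposedly unimportant data may still be needed later.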

B] Velocity in Big Data

The speed of data processing is an important factor when talking about Big Data concepts. Consider websites, especially e-commerce sites. Google has stated that the speed at which a page loads is a factor in its rankings. Apart from the rankings, speed also makes shopping more comfortable for users. The same applies to data being processed into other information.

When talking about velocity, it is essential to know that it goes beyond just higher bandwidth. It combines readily usable data with different analysis tools. Readily usable data means doing some homework to create data structures that are easy to process. The next dimension, Variety, sheds further light on this.
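One way to read "readily usable data" is that the data is organized once, ahead of time, so that later questions can be answered without rescanning everything. The sketch below is an assumption-laden illustration of that reading, not a description of any particular Big Data tool: `build_index`, `lookup`, `scan`, and the sample records are all hypothetical.

```python
from collections import defaultdict


def scan(records: list, key: str, value) -> list:
    """Answer a query by checking every record (slow when there is a lot of data)."""
    return [r for r in records if r[key] == value]


def build_index(records: list, key: str) -> dict:
    """Do the homework once: group records by the field we expect to query."""
    index = defaultdict(list)
    for r in records:
        index[r[key]].append(r)
    return dict(index)


def lookup(index: dict, value) -> list:
    """Answer the same query from the prepared structure, without a full scan."""
    return index.get(value, [])


# Made-up sample records, for illustration only.
records = [
    {"customer_id": 101, "item": "keyboard"},
    {"customer_id": 102, "item": "monitor"},
    {"customer_id": 101, "item": "mouse"},
]

by_customer = build_index(records, "customer_id")
fast_result = lookup(by_customer, 101)
slow_result = scan(records, "customer_id", 101)
```

Both approaches return the same records. The difference is that the index is built once and reused, so each later query avoids walking through the whole collection.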

C] Variety of Big Data

When there are loads and loads of data, it becomes important to organize the data so that analysis tools can easily process it. There are tools for organizing data as well. When stored, the data can be unstructured and in any form. It is up to you to figure out its relationship with the other data you hold. Once you determine the relationship, you can pick appropriate tools and convert the data to the desired form for structured and sorted storage.
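As a small illustration of converting data of different forms into one structured, sorted form, the sketch below reads a CSV export and a JSON export and maps both to a common record layout. Everything here is assumed for the example: the two sample sources, their field names, and the target schema (`customer_id`, `amount`) are hypothetical, and only the Python standard library is used.

```python
import csv
import io
import json

# Two made-up sources that describe the same kind of thing in different shapes.
CSV_SOURCE = "customer_id,amount\n101,25.50\n102,10.00\n"
JSON_SOURCE = '[{"customer": 101, "total": "4.50"}, {"customer": 103, "total": 7}]'


def from_csv(text: str) -> list:
    """Map CSV rows to the common schema, converting strings to numbers."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"customer_id": int(row["customer_id"]), "amount": float(row["amount"])}
        for row in reader
    ]


def from_json(text: str) -> list:
    """Map JSON objects, which use different field names, to the same schema."""
    return [
        {"customer_id": int(item["customer"]), "amount": float(item["total"])}
        for item in json.loads(text)
    ]


def combine(*sources: list) -> list:
    """Merge the normalized records and sort them for structured storage."""
    records = [record for source in sources for record in source]
    return sorted(records, key=lambda r: (r["customer_id"], r["amount"]))


combined = combine(from_csv(CSV_SOURCE), from_json(JSON_SOURCE))
```

The relationship between the two sources, that `customer` and `customer_id` mean the same thing, is something a person has to work out first. The code only applies that decision consistently.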

Summary

In other words, Big Data’s 3D Model is based on three dimensions: USABLE data that you possess, proper tagging of data, and faster processing. If these three are taken care of, your data can readily be processed or analyzed to find the information you need.

The above explains both the concepts and the 3D model of Big Data. The articles linked in the second paragraph provide additional background if you are new to the concept.

If you wish to add anything, please comment.