Do you want your data to stay private and never leave your device? Cloud LLM services often come with ongoing subscription fees based on API calls, and they are a poor fit for users in remote areas or with unreliable internet connections. So what’s the solution?

Fortunately, local LLM tools can eliminate these costs and allow users to run models on their own hardware. The tools also process data offline, so no external servers can access your information. You also get more control over the interface, so you can adapt it to your workflow.

In this guide, we have gathered free local LLM tools that meet your privacy, cost, and performance needs.

Free tools to run LLMs locally on a Windows 11 PC

Here are some free local LLM tools that have been handpicked and personally tested.

- Jan

- LM Studio

- GPT4ALL

- AnythingLLM

- Ollama

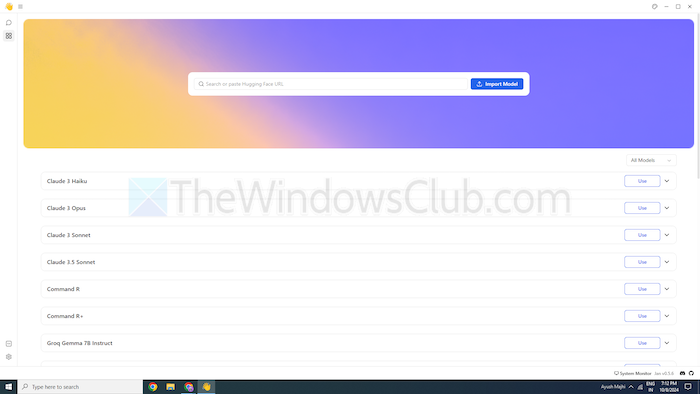

1] Jan

Are you familiar with ChatGPT? If so, think of Jan as a similar assistant that works offline. You can run it on your personal device without an internet connection. It lets you privately generate, analyze, and process text data on your own machine.

It works with top-notch models from providers such as Mistral, Nvidia, or OpenAI, and models that run locally do not send your data to another server. This tool is suitable if you prioritize data security and want a robust alternative to cloud-based LLMs.

Features

- Pre-Built Models: It provides preinstalled AI models that are ready to use without additional setup.

- Customization: Change the dashboard’s color and make the theme solid or translucent.

- Spell Check: Use this option to fix spelling mistakes.

Pros

- Import your own models from Hugging Face.

- It supports extensions for customization.

- Free of cost

Cons

- Less Community Support: Jan has a smaller community, so users may find fewer tutorials and resources.

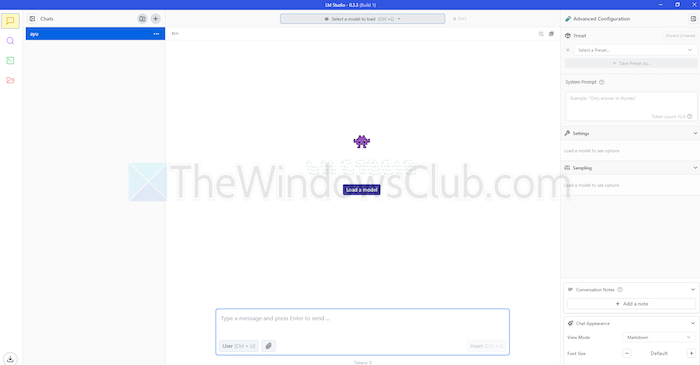

2] LM Studio

LM Studio is another tool for running ChatGPT-like language models locally. It offers large, enhanced models to understand and respond to your requests. But unlike cloud-based models, everything stays on your device. In other words, you get more privacy and control over its usage.

LM Studio can summarize texts, generate content, answer your questions, or even assist with coding, all from your machine. Before running a model, you can get a report on whether your system can handle it. This lets you spend your time and resources only on compatible models.

Features

- File Attachments and RAG: You can attach PDF, DOCX, TXT, and CSV files in the chat box and get responses based on their contents.

- Range of Customization: It offers multiple color themes and lets you choose the complexity level of the interface.

- Rich Resources: It offers free documentation and guides for learning and using the tool.

Pros

- You can use it on Linux, Mac, or Windows.

- Local server setup for developers.

- It offers a curated list of models.
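
The local server mentioned in the list above can be queried from a script. The sketch below assumes the LM Studio server is running at its usual default address, `http://localhost:1234/v1`, and that it answers the OpenAI-style `GET /models` request. The helper names `parse_model_ids` and `list_local_models` are hypothetical, so adjust the address and names to your setup.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload: dict) -> list[str]:
    """Extract model identifiers from an OpenAI-style /models response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url: str = BASE_URL) -> list[str]:
    """Ask the local server which models it can serve (server must be running)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as response:
        return parse_model_ids(json.load(response))

# Usage, with the server started: print(list_local_models())
```

Keeping the parsing separate from the network call makes the helper easy to check without a running server.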

Cons

- Getting started may be complex, especially for newcomers.

3] GPT4ALL

GPT4ALL is another LLM tool that can run models on your devices without an internet connection or even API integration. This program runs without GPUs, though it can leverage them if available, which makes it suitable for many users. It also supports a range of LLM architectures, which makes it compatible with open-source models and frameworks.

It also uses llama.cpp as its backend for LLMs, which improves model performance on CPUs and GPUs without high-end infrastructure. GPT4ALL is compatible with both Intel and AMD processors, and it can use a GPU for faster processing when one is available.

Features

- Local File Interaction: Models can query and interact with local files, such as PDFs or text documents, using LocalDocs.

- Efficient: Many models are available in 4-bit versions, which use less memory and processing power.

- Extensive Model Library: GPT4ALL has over 1000 open-source models from repositories like Hugging Face.
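
The memory benefit of 4-bit models comes down to simple arithmetic: the space needed for the weights scales with the number of bits stored per parameter. As a rough sketch, the function below estimates the weight size only. It ignores the context cache and runtime overhead, and the 7-billion-parameter size is an illustrative assumption, not a specific GPT4ALL model.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Illustrative 7-billion-parameter model (an assumed size).
params = 7e9
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, the 4-bit version needs about a quarter of the memory of the 16-bit version. Real quantized files are somewhat larger because they also store scaling factors.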

Pros

- Open-source and Transparent

- It offers a specific package for enterprises to use AI locally.

- GPT4ALL strongly focuses on privacy

Cons

- Limited support for ARM-based devices, such as some Chromebooks

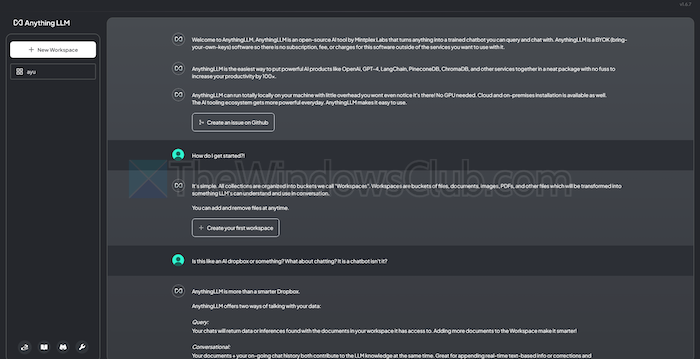

4] AnythingLLM

AnythingLLM is an open-source LLM application that offers high customization and a private AI experience. It lets users deploy and run LLMs offline on their local devices, whether they run macOS, Windows, or Linux, which keeps data private.

Moreover, the tool best suits single users who want an easy-to-install solution with minimal setup. You can treat it as a private ChatGPT-like system that businesses or individuals can run.

Features

- Developer-Friendly: It has a complete API for custom integration.

- Tool Integration: You can integrate additional tools and generate API keys.

- Easy Setup: It has a single-click installation process.

Pros

- Flexibility in LLM usage

- Document Centric

- The platform has AI agents to automate tasks

Cons

- Lacks Multi-user support

- Complexity in advanced features

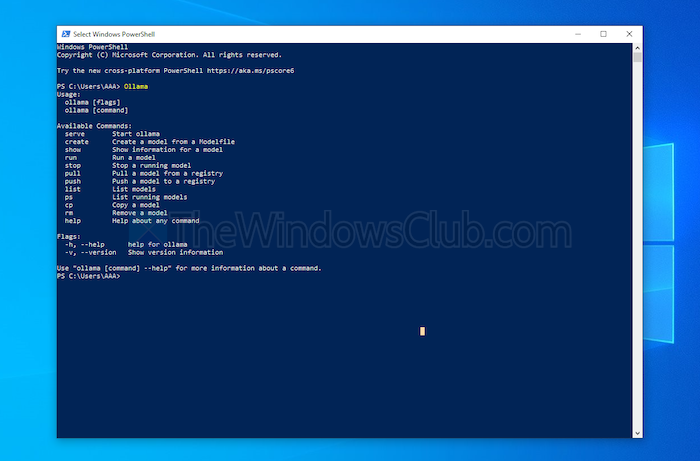

5] Ollama

Ollama gives you full control over creating local chatbots without relying on an external API. It currently has a large base of contributors on GitHub who provide frequent updates and improve its overall functionality, which keeps the tool current and helps it perform well. Unlike the other tools discussed above, it uses a terminal interface to install and launch a model.

Each model you install has its own configuration and weights, avoiding conflicts with other software on your machine. Along with its command-line interface, Ollama has an API compatible with OpenAI, so you can easily integrate it with applications that already use OpenAI models.
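
Because the API follows OpenAI's request format, existing client code mostly needs a different base URL. Here is a minimal sketch, assuming Ollama is serving on its default address `http://localhost:11434` and that a model named `llama3` has already been pulled. The model name and the helper names `build_chat_request` and `ask` are placeholders.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; /v1 is its OpenAI-compatible prefix.
OLLAMA_BASE_URL = "http://localhost:11434/v1"


def build_chat_request(prompt: str, model: str = "llama3",
                       base_url: str = OLLAMA_BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a local server."""
    body = {
        "model": model,  # placeholder: use a model you have pulled
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the reply text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

With Ollama running, `ask("Summarize this paragraph in one sentence.")` would return the model's reply as a string.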

Features

- Local Deployment: Ollama lets you run large language models, such as Llama and Mistral, offline.

- Model Customization: Advanced users can set model behavior using a Modelfile.

- OpenAI API Compatibility: It has a REST API compatible with OpenAI’s API.

- Resource Management: It optimizes CPU and GPU usage without overloading the system.
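
To illustrate the model customization feature listed above: the configuration file is plain text with directives such as `FROM`, `PARAMETER`, and `SYSTEM`. The sketch below renders one from Python. The base model `llama3`, the temperature value, and the `render_modelfile` helper are illustrative assumptions.

```python
def render_modelfile(base_model: str, system_prompt: str,
                     temperature: float = 0.7) -> str:
    """Return Modelfile text that customizes a base model's behavior."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


modelfile = render_modelfile("llama3", "You are a concise technical assistant.")
print(modelfile)
```

Saving this text as `Modelfile` and running `ollama create my-assistant -f Modelfile` would register the customized model under the hypothetical name `my-assistant`.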

Pros

- It gives you access to a large collection of models.

- It can import models from open-source libraries such as PyTorch.

- Ollama integrates with a wide range of libraries

Cons

- It doesn’t provide a graphical user interface

- Requires a large amount of storage

Conclusion

In summary, local LLM tools offer a worthy alternative to cloud-based models. They provide strong privacy and control at no cost. Whether you aim for ease of use or customization, the tools listed cater to a variety of needs and expertise levels.

Depending on your needs, such as processing power and compatibility, any of these can help you leverage AI’s potential without compromising privacy or paying subscription fees.
