If you are looking to run a large language model (LLM) on your computer, there are various options, such as MSTY, GPT4All, and more. However, in this post, we are going to talk about Gemma 3, an open model from Google built on the same technology as Gemini. So, if you want to install Gemma 3 on Windows, this guide is for you.

Install Gemma 3 LLM on Windows 11/10 PC

Before we install Gemma 3, it is important to know that there are several variants of this model. We have listed them below and recommend that you go through them once to make an informed decision.

- Gemma3:1B: A compact model optimized for lightweight tasks and quick responses, suitable for devices with limited resources.

- Gemma3:4B: A mid-range model offering balanced performance and efficiency, ideal for versatile applications.

- Gemma3:12B: A powerful model tailored for complex reasoning, coding, and multilingual tasks across various domains.

- Gemma3:27B: The largest and most advanced version, excelling in high-capacity tasks with enhanced reasoning, multimodal capabilities, and a 128k-token context window.
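To make the choice a little more concrete, here is a back-of-the-envelope sketch of how much memory the weights of each variant need. It assumes that memory is roughly the parameter count multiplied by the bytes per weight, uses the nominal sizes from the list above, and ignores runtime overhead such as the context cache, so the numbers are lower bounds rather than measured requirements. The 4-bit and 16-bit precisions are illustrative assumptions, not values taken from any particular download.

```python
# Rough weight-only memory estimate for each Gemma 3 variant.
# Assumption: memory ~= parameter count x bytes per weight. Runtime overhead
# (context cache, activations) is ignored, so treat results as lower bounds.

# Nominal parameter counts in billions, taken from the variant names.
PARAMS_BILLIONS = {
    "gemma3:1b": 1,
    "gemma3:4b": 4,
    "gemma3:12b": 12,
    "gemma3:27b": 27,
}


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Return the approximate size of the weights in GB (1 GB = 10**9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for tag, size in PARAMS_BILLIONS.items():
        q4 = weight_memory_gb(size, 4)    # 4-bit quantized weights
        f16 = weight_memory_gb(size, 16)  # 16-bit weights
        print(f"{tag}: ~{q4:.1f} GB at 4-bit, ~{f16:.1f} GB at 16-bit")
```

If the estimate for a variant is already close to the RAM or VRAM you have, the next smaller variant is usually the safer pick.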

Use one of the following methods to install Gemma 3 LLM on a Windows 11 PC.

- Using Ollama

- Using LM Studio

- Using Google AI Studio

Let us discuss them in detail.

1] Using Ollama

Ollama is a platform designed to simplify the use of large language models (LLMs) like Llama 3.3, Gemma 3, and others. It allows users to run these models locally on macOS, Linux, and Windows. We are going to use it to install Gemma 3.

To do so, follow the steps mentioned below.

- First of all, navigate to ollama.com.

- Download the Windows installer and run it to complete the installation.

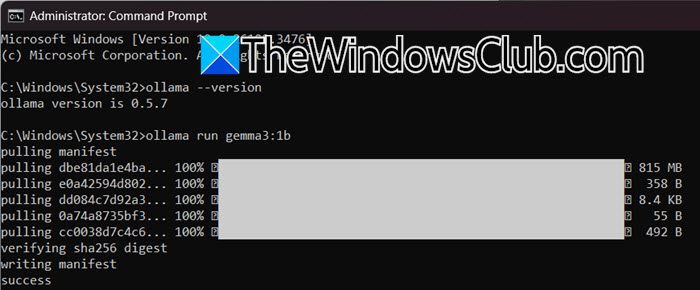

- Open Command Prompt and run the following command to check if it is installed.

ollama --version

- Now, run one of the following commands, depending on the Gemma 3 variant you want to download.

ollama run gemma3:1b

ollama run gemma3:4b

ollama run gemma3:12b

ollama run gemma3:27b

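- There is no separate install step. The ollama run command downloads the model the first time it is used and then opens an interactive chat prompt. To confirm that the model is available locally, run the following command.

ollama list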

That is how you can install Gemma 3 using Ollama.
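If you would rather verify the setup from a script, the following is a minimal sketch. It assumes Ollama is running on its default local address (http://localhost:11434) and uses its /api/tags endpoint, which lists the models stored on your machine. The helper names installed_models and has_gemma3 are made up for this example.

```python
import json
import shutil
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def installed_models(tags_response: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [model["name"] for model in tags_response.get("models", [])]


def has_gemma3(names: list[str]) -> bool:
    """Return True if any installed model name starts with 'gemma3'."""
    return any(name.startswith("gemma3") for name in names)


if __name__ == "__main__":
    # Check that the ollama executable can be found on PATH.
    print("ollama on PATH:", shutil.which("ollama") is not None)
    try:
        with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=5) as resp:
            names = installed_models(json.load(resp))
        print("Installed models:", names)
        print("Gemma 3 present:", has_gemma3(names))
    except OSError as err:
        # Raised when the server is not running or cannot be reached.
        print("Could not reach the Ollama server:", err)
```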

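There are a lot of things you can try once you have Gemma 3. To send a single prompt straight from the command line, pass it after the model name, using the tag you downloaded. For example: ollama run gemma3:4b "<Input>"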
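Prompts can also be sent from a program rather than from Command Prompt. The sketch below assumes the Ollama server is listening on its default address and posts to its /api/generate endpoint with streaming turned off, so the whole answer comes back in one JSON object. The function names and the sample prompt are illustrative only.

```python
import json
import urllib.request


def build_generate_request(model: str, prompt: str) -> dict:
    """Build the JSON body for POST /api/generate with streaming disabled."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = "http://localhost:11434") -> str:
    """Send one prompt to a local Ollama server and return the generated text."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        # Use the tag you actually downloaded, for example gemma3:1b.
        print(generate("gemma3:4b", "Explain what a context window is in one sentence."))
    except OSError as err:
        # Covers a server that is not running and HTTP errors such as an unknown model.
        print("Request failed:", err)
```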

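If you want to do something related to images, use a multimodal variant (4B or larger, since the 1B model is text-only) and include the path to the image in the prompt. For example: ollama run gemma3:4b "Describe this image: C:\path\to\image.jpg".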
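Images can be sent from a script as well. As a sketch, and assuming a multimodal variant such as gemma3:4b is installed and the Ollama server is on its default address, the /api/generate endpoint accepts an images field holding base64-encoded image data. The script name describe_image.py and the helper build_image_request are hypothetical.

```python
import base64
import json
import sys
import urllib.request
from pathlib import Path


def build_image_request(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a /api/generate body that attaches one base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"model": model, "prompt": prompt, "images": [encoded], "stream": False}


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: python describe_image.py <path-to-image>")
    else:
        try:
            payload = build_image_request(
                "gemma3:4b",  # assumption: a multimodal variant is installed
                "Describe this image.",
                Path(sys.argv[1]).read_bytes(),
            )
            request = urllib.request.Request(
                "http://localhost:11434/api/generate",
                data=json.dumps(payload).encode("utf-8"),
                headers={"Content-Type": "application/json"},
            )
            with urllib.request.urlopen(request, timeout=300) as resp:
                print(json.load(resp)["response"])
        except OSError as err:
            # Covers a missing file as well as an unreachable server.
            print("Request failed:", err)
```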


2] Using LM Studio

LM Studio is a program that allows you to run large language models (LLMs) locally on your computer. It supports models like Llama, DeepSeek, and Phi, allowing users to interact with them offline. The platform offers features such as a chat interface, local server capabilities, and compatibility with OpenAI-like endpoints. We are going to use it to install Gemma 3. To do so, you first need to install LM Studio. Go to lmstudio.ai and download the tool for Windows. Then, go to the Downloads folder and run the installer to set up LM Studio on your computer.

Once LM Studio is installed, follow the steps mentioned below to install Gemma 3.

- Open LM Studio.

- Click on the magnifying glass icon labeled as Discover.

- Search for “Gemma 3”. Several Gemma 3 models will appear. Instead of selecting one at random, click on a model to see its details. If it says “Likely too large for this machine” or shows any other message discouraging you from installing the model, you can still install it, but it is always recommended to choose a model that is compatible with your system.

- In my case, Gemma 3 1B was compatible, so I selected it and clicked on Download.

Once the download process is completed, either click on Load Model or open a new chat by clicking on the + icon next to Chat, and in the Select a model to load drop-down, pick Gemma 3.
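Since LM Studio offers a local server with OpenAI-like endpoints, a loaded model can also be queried from code. The sketch below assumes you have started the local server inside LM Studio and that it listens on http://localhost:1234, which is its usual default. The model identifier "gemma-3-1b" is a placeholder, so replace it with the identifier LM Studio shows for the model you loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its usual default port.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, user_message: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion body with a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # "gemma-3-1b" is a hypothetical identifier used only for illustration.
    body = json.dumps(build_chat_request("gemma-3-1b", "Say hello in five words.")).encode("utf-8")
    request = urllib.request.Request(
        LM_STUDIO_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    try:
        with urllib.request.urlopen(request, timeout=120) as resp:
            print(extract_reply(json.load(resp)))
    except OSError as err:
        # Raised when the local server has not been started or rejects the request.
        print("Request failed:", err)
```

Because the request follows the OpenAI chat format, the same body should work with other tools that accept a custom base URL.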

This is how you can install Gemma 3 using LM Studio.

3] Using Google AI Studio

Last but not least, let us use Google AI Studio. It is an online platform that allows you to use multiple AI models, including Gemini and Gemma 3, so nothing needs to be installed locally. To use it, go to aistudio.google.com. Then, from the right section of the screen, under Models, select Gemma 3. Once done, you can start chatting with the model.

Hopefully, you are comfortable with one of the methods mentioned here and can now enjoy Gemma 3.


How to install Visual Studio in Windows 11?

To install Visual Studio in Windows 11, you need to go to visualstudio.microsoft.com. Now, click on the Download icon and download the Installation Media. Finally, you can run the installation media, and follow the on-screen instructions to complete the process.


How do I install Gemma 3 LLM on Windows 11 PC?

There are three ways to get Gemma 3: Ollama, where you run a command such as ollama run gemma3:4b; LM Studio, where you download a compatible model through its interface; or Google AI Studio, where you access Gemma 3 online at aistudio.google.com. Each method caters to a different preference, local installation or cloud-based interaction, so you can pick the one that fits your use case.
