If you want to learn how to use DeepSeek V3 Coder in Windows 11, this post will guide you. DeepSeek-V3 Coder is a specialized version of the DeepSeek-V3 model. It leverages natural language processing and advanced machine learning techniques to understand and generate code, provide programming assistance, and help users with software development tasks.

How to use DeepSeek V3 Coder in Windows 11?

DeepSeek V3 Coder is for those looking to improve their coding skills or streamline their software development process. If you want to use DeepSeek V3 Coder in Windows 11, you can access it through the online demo platform or the API service, or download the model weights for local deployment. Let us see how.

1] Access DeepSeek-V3 Coder via Web Browser

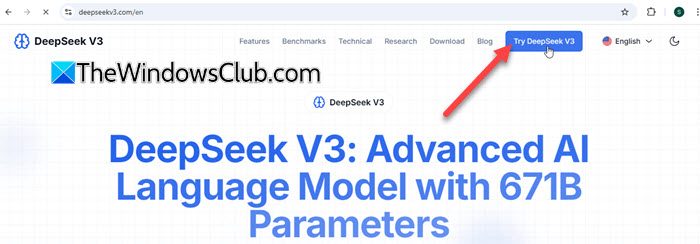

Launch your favorite browser, type www.deepseekv3.com in the URL bar, and press Enter. You’ll be redirected to DeepSeek’s official website. Click the ‘Try DeepSeek V3’ button in the top-right corner.

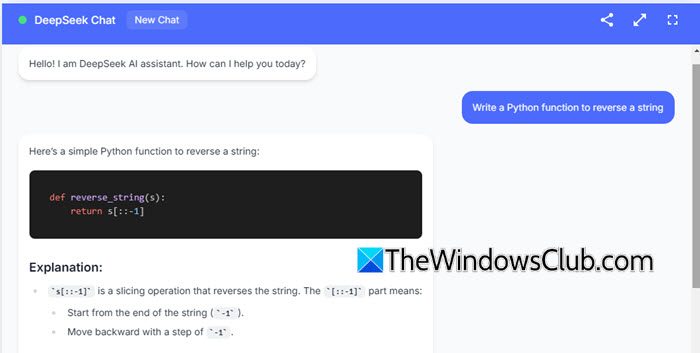

You’ll see DeepSeek’s chat interface. Type your query in the chat input box and press Enter. DeepSeek V3 will quickly generate a response for you.

Register with DeepSeek to gain access to premium features or advanced options, such as saving chat history or customizing preferences.

Type chat.deepseek.com in the URL bar of your browser and press Enter. Next, sign up for a DeepSeek account. Upon login, you’ll see a chat interface based on the latest DeepSeek-V3 model. You may use the interface to input your coding queries, generate code, or debug programs.

2] Access DeepSeek-V3 Coder via API

To access the DeepSeek-V3 model via API on Windows 11, follow these steps:

Register for an account on the DeepSeek platform to receive your API key.

Download and install Python from python.org, if not already installed. During installation, check the box for Add python.exe to PATH. With Python on the PATH, you can type python or pip in any terminal window and the system will know where to find the interpreter or package manager. Without it, you would need to navigate to the directory where Python is installed every time you want to run a Python command.
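If you are not sure whether the PATH option took effect, a short script can tell you. The sketch below uses Python's standard shutil.which to look up each command the way a terminal would; the helper name find_on_path is made up for this example.

```python
import shutil


def find_on_path(commands=("python", "pip")):
    """Map each command name to its resolved location, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in commands}


# Print where each command was found, if anywhere.
for name, location in find_on_path().items():
    status = location if location else "not found on PATH"
    print(f"{name}: {status}")
```

If either command shows up as not found, re-run the installer and tick the PATH checkbox, or open a new terminal window so it picks up the updated PATH.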

Next, install the appropriate SDK.

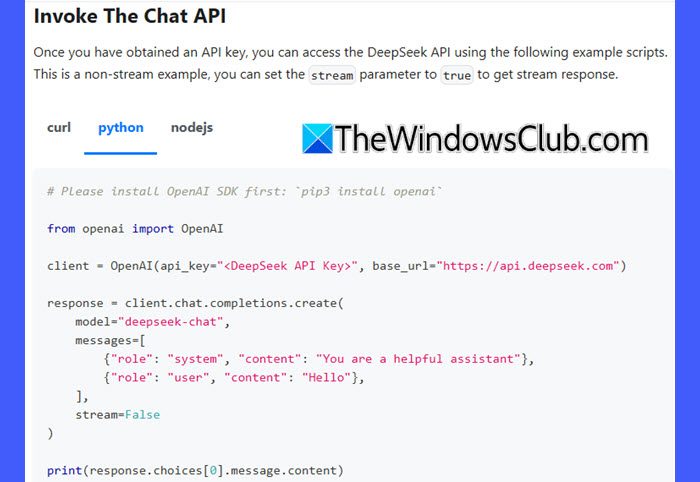

DeepSeek API uses an OpenAI-compatible API format, so you may access the DeepSeek API using the OpenAI SDK or any OpenAI API-compatible software. To install the OpenAI SDK, open Command Prompt and execute the following command:

pip install openai

Once your development environment is set up, configure API access by setting the base URL to:

'https://api.deepseek.com'
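Rather than pasting the key into every script, you may prefer to read it from an environment variable. Here is a minimal sketch of that idea. The variable name DEEPSEEK_API_KEY and the helper load_settings are assumptions made for this example, not names DeepSeek requires.

```python
import os

DEEPSEEK_BASE_URL = "https://api.deepseek.com"


def load_settings(env=None, key_name="DEEPSEEK_API_KEY"):
    """Return keyword arguments for the OpenAI client, reading the key from env."""
    env = os.environ if env is None else env
    api_key = env.get(key_name, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {key_name} environment variable first")
    return {"api_key": api_key, "base_url": DEEPSEEK_BASE_URL}


# Demo with a placeholder mapping instead of the real environment.
settings = load_settings({"DEEPSEEK_API_KEY": "sk-placeholder"})
print(settings["base_url"])
```

In Command Prompt, set DEEPSEEK_API_KEY=your-key defines the variable for the current session, after which OpenAI(**load_settings()) creates a client without the key appearing in your code.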

Then access the DeepSeek V3 model by making API calls. Here’s a Python example to interact with the DeepSeek V3 model:

from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

response = client.chat.completions.create(
    model="deepseek-chat",
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Hello"},
    ],
    stream=False
)

print(response.choices[0].message.content)

Note:

- The model name deepseek-chat invokes DeepSeek V3.

- Enable streaming by setting stream=True in the Python call (stream=False returns the whole response at once). Streaming is ideal for real-time response scenarios.

3] Access DeepSeek-V3 Coder via Local Deployment

Deploying DeepSeek V3 locally involves downloading the model weights and setting up the necessary environment. However, there isn’t an official DeepSeek V3 document specifically tailored for deploying the model locally on Windows 11. The available deployment guides primarily focus on Linux environments, particularly Ubuntu 20.04 or higher.

For local deployment of DeepSeek-V3 Coder on Windows 11, you may create a Linux-like environment within your Windows system.

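Before proceeding, make sure your system meets these minimum hardware and software requirements. Treat them as a baseline for setting up the toolchain: the full DeepSeek-V3 model has 671 billion parameters, and the conversion command further down splits it across 16 model-parallel ranks.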

- GPU: NVIDIA GPU with CUDA support (e.g., RTX 30xx series or higher).

- Python: Version 3.8 or higher.

- Memory: At least 16GB RAM (32GB recommended).

- CUDA and cuDNN: Install the versions compatible with the DeepSeek V3 dependencies.
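A quick script can confirm part of this checklist before you go further. The sketch below checks the Python version and looks for the nvidia-smi tool that ships with NVIDIA drivers. Finding nvidia-smi is only a hint that a driver is installed; it does not verify your CUDA or cuDNN versions. The function name check_environment is made up for this example.

```python
import shutil
import sys


def check_environment(min_python=(3, 8)):
    """Report whether Python is new enough and whether nvidia-smi is on PATH."""
    return {
        "python_ok": sys.version_info >= min_python,
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
    }


# Print a yes/no line for each check.
for item, ok in check_environment().items():
    print(f"{item}: {'yes' if ok else 'no'}")
```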

Now follow these steps:

Install Windows Subsystem for Linux 2 on your Windows 11 PC.
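Once WSL 2 is installed, it is easy to lose track of whether a terminal is running Windows Python or the Linux one. As a rough heuristic, WSL kernels usually include the word microsoft in their release string, which you can check as sketched below. The helper name looks_like_wsl is an assumption for this example.

```python
import platform


def looks_like_wsl(release=None):
    """Heuristic: WSL kernels usually include 'microsoft' in their release string."""
    release = platform.uname().release if release is None else release
    return "microsoft" in release.lower()


print("Running inside WSL:", looks_like_wsl())
```

Run the remaining steps from a terminal where this prints True.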

Clone the DeepSeek V3 Repository:

git clone https://github.com/deepseek-ai/DeepSeek-V3.git

Navigate to the inference directory and install the dependencies listed in requirements.txt:

cd DeepSeek-V3/inference
pip install -r requirements.txt

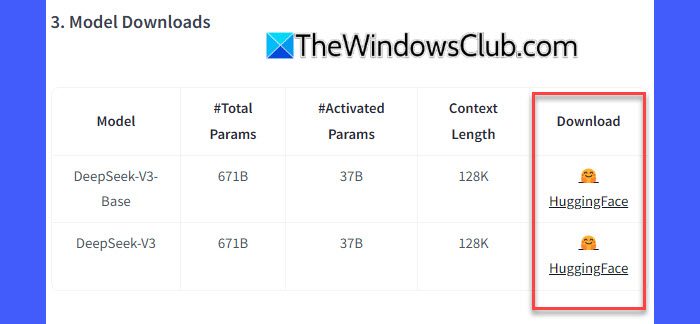

Next, download the model weights from HuggingFace and put them into the /path/to/DeepSeek-V3 folder.
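One possible way to script the download is the huggingface_hub package (pip install huggingface_hub) and its snapshot_download function. The sketch below assumes the weights live in the deepseek-ai/DeepSeek-V3 repository on HuggingFace and adds a free-space check first, since the files are very large. The helper names and the needed_gb figure you pass in are your own choices, not values from DeepSeek.

```python
import shutil


def has_free_space(path, needed_gb):
    """Return True if the drive holding path has at least needed_gb free."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb


def download_weights(local_dir, repo_id="deepseek-ai/DeepSeek-V3"):
    """Download every file of the model repository into local_dir."""
    # Imported here so the space check works without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


# Demo: any existing folder has at least 0 GB free.
print(has_free_space(".", needed_gb=0))  # True
```

A call such as download_weights("/path/to/DeepSeek-V3") would then fetch the files; check the size listed on the model page before choosing needed_gb.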

Next, convert the model weights to a specific format:

python convert.py --hf-ckpt-path /path/to/DeepSeek-V3 --save-path /path/to/DeepSeek-V3-Demo --n-experts 256 --model-parallel 16
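The two numbers at the end of that command are related. Assuming the experts are divided evenly among the model-parallel ranks, which is what the flag names suggest (the repository's convert.py remains the authority), each rank ends up holding 256 / 16 = 16 experts. The helper below is only an illustration of that arithmetic.

```python
def experts_per_rank(n_experts, model_parallel):
    """Number of experts each rank holds, assuming an even split."""
    if n_experts % model_parallel != 0:
        raise ValueError("n_experts must be divisible by model_parallel")
    return n_experts // model_parallel


print(experts_per_rank(256, 16))  # 16
```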

Now you can chat with DeepSeek-V3 or run batch inference on a given file.

That’s it! I hope you find this useful.

Is DeepSeek free?

DeepSeek provides free access to certain models, allowing users to experience their capabilities without immediate cost. Other models operate on a paid basis, with costs determined by usage. The latest model, DeepSeek-V3, has a pricing structure of $0.14 per million input tokens and $0.28 per million output tokens (there’s an ongoing discount on DeepSeek-V3 pricing until February 8, 2025).
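To see what those rates mean for a real workload, you can plug your token counts into a small calculator. The sketch below uses only the two prices quoted above; the function name and the sample token counts are made up for illustration.

```python
INPUT_USD_PER_MILLION = 0.14   # price per million input tokens, as quoted above
OUTPUT_USD_PER_MILLION = 0.28  # price per million output tokens, as quoted above


def estimate_cost(input_tokens, output_tokens):
    """Estimate the cost in US dollars for the given token counts."""
    cost = (input_tokens / 1_000_000) * INPUT_USD_PER_MILLION
    cost += (output_tokens / 1_000_000) * OUTPUT_USD_PER_MILLION
    return round(cost, 6)


# 2 million input tokens and 500,000 output tokens.
print(f"${estimate_cost(2_000_000, 500_000):.2f}")  # $0.42
```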

What GPU do you need for DeepSeek Coder V2?

Deploying DeepSeek-Coder-V2 requires substantial GPU resources due to its large model size and complexity. For inference in BF16 (bfloat16) format, the model requires 8 GPUs, each equipped with 80 GB of memory.
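A back-of-the-envelope calculation shows why so much memory is needed. BF16 stores each parameter in 2 bytes, so the weights alone take roughly twice the parameter count in gigabytes. The sketch below assumes the 236-billion-parameter size listed on the DeepSeek-Coder-V2 model card, a figure not taken from this post.

```python
BYTES_PER_BF16_PARAM = 2


def bf16_weights_gb(params_billions):
    """Approximate size of the weights in GB when stored in BF16."""
    return params_billions * BYTES_PER_BF16_PARAM


weights = bf16_weights_gb(236)  # assumed parameter count, in billions
total = 8 * 80                  # eight GPUs with 80 GB each

print(f"Weights: {weights} GB, GPU memory: {total} GB, headroom: {total - weights} GB")
```

The headroom left after loading the weights is what the GPUs have available for activations and the attention cache during inference.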
