NVIDIA Chat with RTX has opened a new frontier in user interaction. Designed to make things easier for users, this tool combines artificial intelligence with NVIDIA's RTX technology to give you your own AI assistant that runs locally on your PC. In this article, we will explore the ins and outs of using the NVIDIA Chat with RTX AI chatbot on a Windows PC and unlock its full potential.

What is NVIDIA Chat with RTX?

Chat with RTX is a tech demo that requires an NVIDIA GeForce RTX 30 series GPU or newer with at least 8GB of VRAM, and it lets users build a customized chatbot. If you are wondering how it differs from ChatGPT: Chat with RTX runs open-source LLMs (such as Mistral or Llama 2) locally, accelerated by TensorRT-LLM and combined with RAG, whereas ChatGPT runs OpenAI's GPT models in the cloud.

TensorRT-LLM optimizes inference on the GPU so that responses are generated quickly. RAG (Retrieval-Augmented Generation) means the chatbot does not only generate responses: it first retrieves relevant information from a knowledge base, such as your own files, and uses it to ground its answers. ChatGPT, by contrast, does not search a folder of files on your own PC in this way.
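To make the RAG idea concrete, here is a toy Python sketch of the retrieve-then-augment steps. It is not how Chat with RTX is implemented: real RAG pipelines use neural embeddings and a vector index, whereas this sketch scores documents with simple bag-of-words cosine similarity. The helper names `tokenize`, `cosine`, `retrieve`, and `build_prompt` are hypothetical, and the final generation step (sending the prompt to an LLM) is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lower-case the text and count its word tokens (a crude stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Retrieval step: names of the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda name: cosine(q, tokenize(documents[name])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Augmentation step: prepend the retrieved passages to the user's question."""
    context = "\n".join(f"[{name}] {documents[name]}" for name in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = {
        "schedule.txt": "Team meeting on Monday at 10 am in room 4.",
        "recipe.txt": "Mix flour, sugar and eggs, then bake for 30 minutes.",
        "lease.txt": "The tenant must give 60 days notice before moving out.",
    }
    print(retrieve("When is the team meeting?", docs, k=1))  # → ['schedule.txt']
    print(build_prompt("When is the team meeting?", docs, k=1))
```

The prompt produced by `build_prompt` is what a RAG system would hand to the language model, which is why answers can cite your own files rather than only the model's training data.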

Use the NVIDIA Chat with RTX AI chatbot on a Windows PC

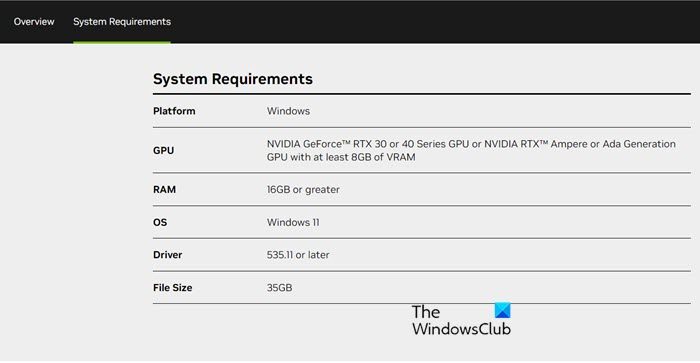

NVIDIA Chat with RTX has its own prerequisites: an RTX GPU, 16GB of RAM, 100GB of storage, and Windows 11. ChatGPT, by contrast, can be used on various platforms. You can check the full system requirements on nvidia.com.
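If you want to sanity-check a PC against the figures quoted above before starting a large download, the Python sketch below compares a machine's specs with those thresholds. The thresholds are copied from this article rather than from an official NVIDIA source, and `MachineSpecs`, `check_requirements`, and `free_disk_gb` are hypothetical names, so confirm against the requirements on nvidia.com. Only free disk space is read automatically, via `shutil.disk_usage`; the GPU series, VRAM, RAM, and Windows version are entered by hand because the standard library has no portable way to query them.

```python
import shutil
from dataclasses import dataclass

# Minimums as quoted in this article (assumption: verify on nvidia.com).
MIN_RTX_SERIES = 30  # GeForce RTX 30 series or newer
MIN_VRAM_GB = 8
MIN_RAM_GB = 16
MIN_FREE_DISK_GB = 100
MIN_WINDOWS_VERSION = 11


@dataclass
class MachineSpecs:
    rtx_series: int        # e.g. 30 for an RTX 3060, 40 for an RTX 4070
    vram_gb: float
    ram_gb: float
    free_disk_gb: float
    windows_version: int   # e.g. 10 or 11


def check_requirements(specs: MachineSpecs) -> list[str]:
    """Return the unmet requirements; an empty list means the PC qualifies."""
    problems = []
    if specs.rtx_series < MIN_RTX_SERIES:
        problems.append("needs a GeForce RTX 30 series GPU or newer")
    if specs.vram_gb < MIN_VRAM_GB:
        problems.append(f"needs at least {MIN_VRAM_GB}GB of VRAM")
    if specs.ram_gb < MIN_RAM_GB:
        problems.append(f"needs at least {MIN_RAM_GB}GB of RAM")
    if specs.free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"needs at least {MIN_FREE_DISK_GB}GB of free storage")
    if specs.windows_version < MIN_WINDOWS_VERSION:
        problems.append(f"needs Windows {MIN_WINDOWS_VERSION}")
    return problems


def free_disk_gb(path: str = ".") -> float:
    """Free space on the drive containing `path`, in gigabytes (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


if __name__ == "__main__":
    # Hand-entered example values for a hypothetical RTX 3060 12GB machine.
    specs = MachineSpecs(rtx_series=30, vram_gb=12, ram_gb=16,
                         free_disk_gb=free_disk_gb(), windows_version=11)
    print(check_requirements(specs) or "All listed requirements are met.")
```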

Once all the prerequisites are met, let's install the app. Here's how to do it:

- Before starting the download, make sure your internet connection is stable, then download the Chat with RTX ZIP file from nvidia.com by clicking the Download Now button.

- Right-click the ZIP file and extract it. Then open the extracted folder and double-click the setup file to launch it.

- Follow the on-screen prompts, check all the boxes, and click the Next button. This automatically downloads and installs the LLM along with all the necessary dependencies.

Once the installation is complete, click the Close button, and voilà! You are all set to start setting up the app.

Use NVIDIA Chat with RTX

Once the installation is complete, it is time to customize and use the app. While Chat with RTX can function as a standard AI chatbot, we are also going to explore its RAG feature. This lets us tailor its responses to the content we grant it access to, which makes it far more versatile. Here's how to do it:

- Form a RAG folder

- Set up the environment

- Fire up the AI with your queries and requests

Let’s talk about them in detail.

1] Form a RAG folder

To form a RAG folder, follow the steps mentioned below:

- First and foremost, create a new dedicated folder to organize files for RAG analysis.

- Now, fill the folder with the data files you want the AI to analyze. These can cover a diverse range of topics and file types, such as documents, PDFs, and text files. However, we recommend keeping the number of files modest to maintain optimal performance.

This folder will act as the database from which Chat with RTX draws its input.
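If your files are scattered across several locations, a short script can gather them into the dedicated folder for you. The Python sketch below copies supported files from a source folder (including its subfolders) into a dataset folder and skips everything else. The function name `build_rag_folder` and the `max_files` cap of 200 are hypothetical choices, and the extension list is an assumption based on NVIDIA's launch announcement (.txt, .pdf, .doc/.docx, .xml), so check the current documentation before relying on it.

```python
import shutil
from pathlib import Path

# Assumption: file types listed in NVIDIA's launch announcement; check current docs.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def build_rag_folder(source: Path, dataset: Path, max_files: int = 200) -> list[Path]:
    """Copy supported files from `source` (including subfolders) into `dataset`.

    Unsupported file types are skipped, the subfolder layout is preserved so
    files with the same name do not overwrite each other, and copying stops
    after `max_files` files to keep the dataset small.
    """
    source, dataset = source.resolve(), dataset.resolve()
    if dataset.is_relative_to(source):  # Python 3.9+
        raise ValueError("Put the dataset folder outside the source folder.")
    dataset.mkdir(parents=True, exist_ok=True)
    copied: list[Path] = []
    for file in sorted(source.rglob("*")):
        if len(copied) >= max_files:
            break
        if file.is_file() and file.suffix.lower() in SUPPORTED_EXTENSIONS:
            target = dataset / file.relative_to(source)
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(file, target)  # copy2 also keeps the file timestamps
            copied.append(target)
    return copied
```

For example, `build_rag_folder(Path.home() / "Documents", Path.home() / "ChatRTX_Data")` would collect the supported files from your Documents folder into a hypothetical `ChatRTX_Data` folder, which you can then select as the dataset in the app. Keeping the dataset outside the source folder avoids copying earlier copies on a second run.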

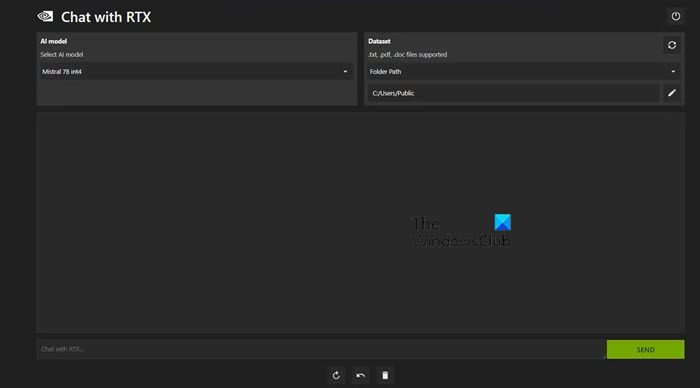

2] Set up the Environment

Once the initial setup is done, go to the Dataset tab, find the Folder Path option, and click the Edit icon. Select the folder you want the AI to read, and choose the AI model. With the dataset in place, you can use the AI to answer your queries and requests effectively.


3] Fire up the AI with your queries and requests

With the setup out of the way, we can now put our queries and requests to Chat with RTX. We can use it either as a regular AI chatbot or as a personal AI assistant. As a chatbot, it answers general queries; as an assistant, it can retrieve accurate schedule data from, for example, a PDF file in your dataset folder. However, this only works if the data files and calendar dates are kept up to date.

Additionally, Chat with RTX's RAG feature can summarize legal documents, generate code for programming tasks, and extract key points from YouTube videos when you simply paste the video URL into the designated field.


Can you use a chatbot with an RTX GPU?

Yes, chatbots can use an RTX GPU for better performance and efficiency. An RTX GPU accelerates the neural-network computations behind Natural Language Processing (NLP), which lets a chatbot handle larger datasets, run more complex models, and deliver faster responses. RTX GPUs can also speed up tasks such as real-time speech recognition and generation, further enhancing a chatbot's conversational capabilities.
